TL;DR

- Code optimization refers to making C/C++ programs run faster and use less memory without altering their functionality.

- It’s used to reduce the computational cost of a program by eliminating redundant operations, improving data locality, simplifying branching, and tightening the critical code path, all while maintaining identical output.

- Optimization happens at different levels: algorithmic, compiler, memory, and runtime.

- Well-optimized code reduces hardware costs, power consumption, and response time, resulting in smoother and more efficient applications for users.

- For C/C++ developers, optimization means shaping the code so the CPU, memory subsystem, and compiler can execute it efficiently — not changing the logic, but reducing the number of cycles, allocations, and stalls required to run it.

Introduction: Meaning of Code Optimization & Its Importance in Real Systems

Writing C/C++ means you’re operating close to the metal you choose, whether data lives on the stack or heap, how objects are laid out in memory, whether something is passed by value or reference, and how often allocations happen. That level of control is powerful, but it also means the compiler and CPU will do exactly what your code expresses, even if it’s wasteful for the hardware underneath.

Optimization in C/C++ isn’t about shaving milliseconds; it’s about removing the hidden costs created by your data layout, your memory access pattern, and the number of instructions your tight loops actually generate. When a loop performs redundant work, or when your algorithm forces the CPU to fetch memory in a non-contiguous pattern, you’re not just losing performance; you’re burning cache bandwidth, causing pipeline stalls, and creating jitter that users actually feel.

Let’s understand why optimization is essential in real-world systems by using the example of a mobile app that applies an image-processing filter.

Let’s understand why optimization is essential in real-world systems by using the example of a real-time signal-processing module that performs an FFT (Fast Fourier Transform) on continuous sensor data.

Imagine you’re building a real-time audio, radar, or sensor-processing system where the FFT runs continuously on incoming data. Here’s a version of the FFT kernel that produces correct results but performs poorly on real hardware due to poor memory access, repeated computations, and unpredictable branching.

FFT Code That Produces the Right Output but Destroys Performance

// Naive FFT implementation

for (int stage = 0; stage < logN; stage++) {

int step = 1 << stage;

for (int i = 0; i < N; i += 2 * step) {

for (int j = 0; j < step; j++) {

// Recomputing sine/cosine every time

float angle = -2.0f * M_PI * j / (2 * step);

float w_real = cosf(angle);

float w_imag = sinf(angle);

int idx1 = i + j;

int idx2 = idx1 + step;

float xr = real[idx2];

float xi = imag[idx2];

float tr = w_real * xr - w_imag * xi;

float ti = w_real * xi + w_imag * xr;

real[idx2] = real[idx1] - tr;

imag[idx2] = imag[idx1] - ti;

real[idx1] = real[idx1] + tr;

imag[idx1] = imag[idx1] + ti;

}

}

}Why This Implementation Collapses on Real Hardware

The FFT kernel is logically correct, but the performance behaviour is disastrous.

Each butterfly operation jumps across distant memory locations, because idx1 and idx2 are separated by a stride that grows every stage. This forces the CPU to repeatedly load new cache lines, resulting in hundreds of thousands of avoidable L1/L2 misses even for moderately sized FFTs.

The implementation also recomputes sine and cosine values in the tightest loop, even though they will be reused thousands of times across different stages. These trig functions are slow, pipeline-heavy, and block auto-vectorization completely.

Additionally, storing the real and imaginary parts in separate arrays (float real[], float imag[]) destroys spatial locality and prevents the compiler from efficiently using SIMD registers. And because of unpredictable conditional logic in real implementations (bounds, bit-reverse, handling odd stages), the CPU suffers repeated branch mispredictions that flush the pipeline.

Functionally, the FFT is correct — but on real hardware, it stalls pipelines, burns cache bandwidth, blocks SIMD, and runs far slower than necessary.

Optimized FFT Using Precomputed Twiddle Factors and Interleaved Complex Layout

Once we analyse why the naive FFT is slow, the underlying issues become obvious: repeated trigonometric evaluation, scattered memory traversal, branch-heavy loops, and data layouts unfriendly to modern CPUs.

Modern FFT implementations (FFTW, KissFFT, DSP libraries) solve this using precomputed twiddle tables, interleaved complex arrays, and predictable, branchless butterfly loops

// Precompute twiddle factors once

for (int k = 0; k < N/2; k++) {

twiddle[k].r = cosf(-2 * M_PI * k / N);

twiddle[k].i = sinf(-2 * M_PI * k / N);

}

// Interleaved complex number layout

typedef struct { float r, i; } Complex;

Complex data[N];The updated memory structure allows for contiguous loads, predictable iteration patterns, and direct SIMD vectorization.

Why the Optimized FFT Outperforms the Naive Version at Every Level

1. Contiguous Memory Access That Works With the CPU, Not Against It

// Optimized interleaved complex layout

typedef struct { float r, i; } Complex;

Complex data[N];

float xr = data[idx2].r;

float xi = data[idx2].i;The optimized FFT reorganizes real and imaginary values into interleaved complex structures, ensuring that all butterfly operands lie next to each other in memory. This allows the CPU to traverse memory linearly instead of jumping unpredictably between arrays.

When data is contiguous, each 64-byte cache line fetch returns multiple values that are used immediately, drastically improving spatial locality. Hardware prefetchers can now accurately predict upcoming accesses, reducing L1/L2 misses and avoiding expensive DRAM fetches.

In contrast, the naive FFT jumps across memory with a stride that changes at each stage, evicting useful cache lines and causing thousands of needless stalls. This simple layout change ensures far better cache utilization and smoother CPU execution.

2. Eliminating Redundant Work Shrinks Computation to the Bare Minimum

// Precompute twiddle factors once

for (int k = 0; k < N/2; k++) {

twiddle[k].r = cosf(-2 * M_PI * k / N);

twiddle[k].i = sinf(-2 * M_PI * k / N);

}

// Load during FFT (no more sin/cos inside loop)

Complex w = twiddle[j * stride];In the naive implementation, sine and cosine values are recomputed in the tightest loop of the FFT. These trigonometric calls are extremely expensive and become the dominant cost of the entire algorithm.

The optimized approach moves this computation outside the FFT loop entirely by precomputing twiddle factors once and storing them in a table. Inside the FFT, the algorithm then simply loads these precomputed values.

This transforms the butterfly into a lightweight arithmetic loop consisting of multiplications and additions. Because the twiddle table is contiguous, many of these values reside in cache, making the butterfly stage significantly faster and far more predictable.

3. Removing Branches Makes Pipelines Predictable and Efficient

// Branchless butterfly loop

for (int stage = 0; stage < logN; stage++) {

int step = 1 << stage;

for (int i = 0; i < N; i += 2 * step) {

for (int j = 0; j < step; j++) {

Complex w = twiddle[j * stride];

Complex x1 = data[i + j];

Complex x2 = data[i + j + step];

float tr = w.r * x2.r - w.i * x2.i;

float ti = w.r * x2.i + w.i * x2.r;

data[i + j].r = x1.r + tr;

data[i + j].i = x1.i + ti;

data[i + j + step].r = x1.r - tr;

data[i + j + step].i = x1.i - ti;

}

}

}Branch misprediction is one of the biggest hidden performance killers in tight loops. Many naive FFT implementations include conditions for bit-reversal ordering, stage boundaries, or index corrections. These branches appear small but frequently mispredict, flushing the CPU pipeline and forcing expensive re-fetches of instructions.

The optimized FFT avoids this entirely by restructuring loops so that all boundary or index adjustments are computed before entering the butterfly loop. The butterfly itself becomes pure arithmetic with zero branching.

This gives the CPU a perfectly predictable instruction path that can be pipelined aggressively and vectorized using SIMD instructions. The result is stable, deterministic performance — crucial for real-time DSP, audio, radar, and embedded systems.

What Optimization Really Means in Software Engineering

In real C++ systems, optimization has nothing to do with “making code fast” in a superficial way. It’s about removing structural inefficiencies, unnecessary allocations, repeated scans, cache-unfriendly access, and unpredictable control flow that silently tax every core in your system. When you’re parsing millions of log lines or running a high-frequency backend service, the wrong data layout or algorithm doesn’t just slow things down; it causes CPU spikes, tail-latency jumps, allocator contention, and throughput collapse under load.

A good C++ optimization pass starts with measurement: you identify where the CPU is actually spending time, then analyze the algorithm and memory behaviour in those hotspots. And in most real systems, the bottleneck isn’t arithmetic, it’s memory traffic, string copies, heap churn, and unpredictable scanning patterns.

Let’s see this clearly through a real backend example.

A Real Backend Example: The Hidden Cost of a Simple userId Parser

// Processes each log line and extracts a key field repeatedly

string extractUserId(const string& logLine) {

size_t pos = logLine.find("userId=");

if (pos == string::npos) return "";

pos += 7;

size_t end = logLine.find(' ', pos);

return logLine.substr(pos, end - pos);

}

void processLogs(const vector<string>& logs) {

for (const auto& line : logs) {

string userId = extractUserId(line);

handleUser(userId);

}

}The unoptimized version looks harmless, but it creates a chain of inefficiencies that compound at scale. Each call to find() rescans the string from the beginning, even though the log format is fixed, turning millions of lines of simple parsing into something closer to O(n²) behaviour.

The situation worsens because substr() allocates a new string for every extracted userId, pushing work onto the heap allocator within the loop. In a high-throughput backend where multiple threads process tens of thousands of logs per second, this allocation churn quickly becomes a bottleneck.

Every extracted userId also performs a fresh copy of characters into a new buffer, which not only consumes CPU cycles but also scatters small allocations throughout the heap. This destroys spatial locality, drives up L1/L2 cache misses, and forces the CPU to fetch data from slower memory levels far more often than necessary.

None of this appears in the high-level logic, but the hardware pays the price for every mistake: latency spikes in the 99th percentile, sudden CPU saturation, allocator lock contention, and a general drop in throughput as the traffic load increases. The code is functionally correct, but architecturally hostile; it constantly conflicts with the CPU, cache, and memory subsystems, rather than working in harmony with them.

This code works correctly, but its performance does not scale.

Note: Before we dive into the optimized version, let’s first understand what string_view is.

string_view is a lightweight, non-owning view of a string.

Unlike a string, it doesn’t store or copy the actual characters—it simply holds a pointer to an existing string and a length.

This makes it extremely fast and memory-efficient

How Zero-Copy Parsing Eliminates the Fundamental Bottleneck

The real issue with the unoptimized log parser isn’t that the code looks cluttered; it’s that the underlying algorithm fundamentally fights the hardware. Each call to extractUserId() performs a fresh linear scan over the log line, allocates a new heap buffer, and copies bytes into it. When this runs across millions of log entries, the cost compounds into a classic O(n·m) pattern (n = logs, m = log length). At production scale, this behaves indistinguishably from quadratic work, which is why CPU usage spikes and tail latencies explode the moment throughput increases.

The fix isn’t “call a faster function”; it requires changing the parsing strategy entirely. Instead of slicing strings and allocating memory for each field, we use a zero-copy approach: compute the field boundaries and return a lightweight reference to the original buffer. In C++, this maps directly to std::string_view, which is a non-owning pair of a pointer and length. It gives constant-time substring extraction with no allocations, no copying, and no cache churn.

Once we adopt this algorithmic change, the optimized path basically writes itself. The parser walks the log line once to locate the userId marker, identifies the closing delimiter, and returns a string_view that refers to memory already in the L1 cache. There are no heap allocations, no memory scattering, and no repeated scans. The logic and output remain identical, but the performance profile shifts from allocate-per-log to boundary-compute-per-log, reducing CPU cost by an order of magnitude and eliminating the allocator-driven jitter that appears in real-world 99th-percentile latency.

string_view extractUserIdView(const string& logLine) {

string_view view(logLine);

size_t pos = view.find("userId=");

if (pos == string::npos) return {};

pos += 7;

size_t end = view.find(' ', pos);

return view.substr(pos, end - pos); // zero-copy

}

void processLogsOptimized(const vector<string>& logs) {

for (const auto& line : logs) {

string_view userId = extractUserIdView(line);

handleUserFast(userId);

}

}Why This Optimization Works

The optimized version works because it removes the two most significant bottlenecks in the original algorithm: repeated scans and heap allocations. Instead of creating a new std::string for every userId, the parser now returns a std::string_view, which is just a pointer + length referencing the original buffer. That means no copying, no heap traffic, and no cache scattering.

The algorithm also switches from “scan the string multiple times” to “scan once and slice,” which dramatically reduces the number of instructions executed in the loop. By keeping all accesses inside the same contiguous log-line buffer, the CPU stays inside L1/L2 cache, eliminating the random misses caused by allocating thousands of small strings.

Why Code Optimization Is a Core Requirement for C/C++ Developers

C and C++ are used in environments where performance directly impacts the system's functionality, from embedded controllers and game engines to backend services and desktop applications. Because these languages expose low-level control, even a slight inefficiency in a loop, memory allocation, or data structure can multiply into real-world problems when the code runs thousands or millions of times. Optimizing C/C++ code ensures that the program produces the same output while doing so with less work, fewer memory operations, and more predictable execution.

Let’s explore the key reasons why optimization plays such a vital role in C and C++ development.

Reducing Execution Time

A significant goal of optimization is to reduce the time it takes for a piece of code to run. In C++, this often means removing redundant operations within loops, avoiding expensive functions, or switching to a faster algorithm while maintaining the same behaviour.

For example, many signal-processing or telemetry systems apply transformations to millions of samples per second:

A single sin() call is slow, so removing it from a loop significantly reduces CPU time.

Systems like audio engines, radar pipelines, and communication stacks rely on such improvements to maintain real-time throughput.

// Unoptimized: costly math inside tight loop

for (float& v : samples)

v = sin(v) * 0.5f + 0.25f;

// Optimized: replace slow trig math with lookup table

for (float& v : samples)

v = sinTable[(int)v];Minimizing Memory Usage

Memory use affects performance, stability, and power consumption — especially in C/C++, where developers manually control allocations. Unnecessary allocations, resizing, and fragmentation often degrade performance over time.

A widespread optimization involves reserving capacity ahead of time:

// Unoptimized: vector grows repeatedly

vector<int> data;

for (int i = 0; i < n; i++)

data.push_back(i);

// Optimized: allocate space once

vector<int> data;

data.reserve(n);

for (int i = 0; i < n; i++)

data.push_back(i);By reserving memory once, the program avoids repeated reallocation and copying.

This is especially useful in systems that build large buffers, such as log collectors, data acquisition modules, or graphics meshes, where memory growth occurs frequently.

Improving Scalability and Responsiveness

Optimization often reduces delays that users can feel, even if the program is technically “fast.” Responsiveness matters for UI frameworks, tooling, and any application that interacts directly with the user.

Consider a typical GUI application using Qt or a custom C++ engine:

// Unoptimized: heavy work blocks UI thread

void onClick() {

auto result = performAnalysis(); // long computation

updateUI(result);

}

// Optimized: perform work on background thread

void onClick() {

async([] { return performAnalysis(); })

.then([](auto result) { updateUI(result); });

}Meeting Real-Time System Requirements

In embedded and robotics systems, code must complete within strict time limits. A delay of even a few milliseconds can destabilize the system.

// Unoptimized: allocation in a 1000 Hz control loop

void loop() {

vector<float> buf;

buf.push_back(readSensor());

compute(buf);

}

// Optimized: fixed buffer reused

static float buf[1];

void loop() {

buf[0] = readSensor();

compute(buf);

}Allocating memory inside a real-time loop introduces unpredictable timing.

This is dangerous in automotive ECUs, drones, medical devices, and aerospace systems, where timing stability is critical.

Enhancing User Experience

Many C++ applications — mainly games and interactive tools — run thousands of updates per second. The data structure used can make a huge difference in how smooth the output feels.

// Unoptimized: linked list causes cache misses

list<Entity> entities;

// Optimized: vector improves cache locality

vector<Entity> entities;Both store entities, but the vector is contiguous in memory, making iteration dramatically faster.

Game engines, such as Unreal Engine and custom in-house engines, rely heavily on these patterns to avoid frame-time spikes.

Reducing Operational Costs

Poorly optimized code consumes more CPU time per request. In backend C++ services, this means you need more machines to handle the same load.

// Unoptimized: copies string on every request

string user = req.user();

// Optimized: no copy needed

string_view user = req.userView();For systems handling tens of thousands of requests per second — such as trading systems, analytics pipelines, or authentication servers — removing copies and reducing per-request work can significantly cut CPU usage, thereby lowering infrastructure costs.

Why Embedded C/C++ Code Optimization Is More Essential Than Ever

Embedded systems today are responsible for far more computation than they were a decade ago. Automotive ECUs must coordinate safety-critical subsystems, medical devices must process continuous sensor data, drones must maintain real-time flight control, and IoT nodes must make decisions locally under severe energy limits. All of this must run on hardware that is intentionally constrained in CPU performance, memory capacity, and power consumption.

Because C and C++ are the primary languages used in these environments, the way code is written and optimized directly determines whether the system meets its timing guarantees, stays within its power envelope, and avoids unnecessary hardware cost. In embedded contexts, optimization is not about making code “faster”—it is about ensuring deterministic execution, reducing jitter, minimizing memory usage, and enabling reliable operation on limited microcontroller architectures.

The Explosion of Embedded Complexity

Modern embedded devices must handle workloads that are significantly more complex than those of traditional systems. Today’s controllers integrate wireless communication stacks, cryptographic routines, real-time data pipelines, and even lightweight machine-learning inference — all running on hardware that is smaller, more power-constrained, and far less forgiving than general-purpose processors. The result is a steep rise in computational demand without a proportional increase in available resources.

A single vehicle now contains more software than early desktop operating systems. Modern cars are projected to exceed 650 million lines of code by 2025, with ADAS modules performing continuous processing across cameras, radar, and lidar sensors. IoT devices must simultaneously handle communication protocols, secure data handling, local processing, firmware updates, and telemetry — all within kilobytes of RAM and tight energy budgets. As software grows, CPU cycles, RAM usage, and flash footprint expand rapidly, but hardware cannot scale at the same rate, especially when shipping millions of units where every cent of cost matters. (See the citation :”Software Is Taking Over the Auto Industry”).

Why Optimization Matters Here

In this environment, every inefficient loop, unnecessary dynamic allocation, or avoidable memory copy directly impacts hardware cost and system capability. If the software becomes heavier, the only alternative is to move to a more expensive microcontroller — increasing BOM cost across tens or hundreds of thousands of devices. Efficient, well-optimized C/C++ code reduces CPU load, shrinks memory usage, and enables these increasingly sophisticated workloads to run reliably on smaller, more affordable embedded chips.

Example: Heavy vs Optimized Sensor Fusion Loop

// Unoptimized: redundant checks & operations in every iteration

for (auto& s : sensors) {

if (s.isActive()) // repeated condition

processSensor(s.data); // expensive processing

}

// Optimized: pre-filter active sensors once

vector<Sensor*> active;

for (auto& s : sensors)

if (s.isActive()) active.push_back(&s);

for (auto* s : active)

processSensor(s->data);This loop runs thousands of times per second in ADAS systems.

Minor improvements compound into significant reductions in CPU load.

Real-Time Constraints and Deterministic Behaviour

Most embedded systems run under strict real-time constraints. The code must not only produce the correct output, but must do so within a fixed and guaranteed execution window. This is the defining difference between general software and embedded C/C++ systems. Automotive ECUs must close control loops every millisecond, ADAS pipelines must process sensor data continuously without stalling, avionics autopilot logic must maintain precise timing, medical infusion devices must update dosage calculations predictably, and drone stabilization loops must react within a few milliseconds to maintain flight stability. In these systems, a single missed deadline can cause the system to enter an unsafe or unstable state.

Consider a 1 kHz flight-control loop. If a heap allocation occurs inside that loop, the allocation cost varies depending on fragmentation, the allocator's state, and previous memory usage. That variability—called allocation jitter—causes uneven timing across iterations. Even a one-time delay creates drift, oscillation, or unstable actuator output. This is why real-time optimization focuses aggressively on eliminating nondeterministic behaviour: removing allocations, cutting unpredictable branches, minimizing memory copies, and restructuring loops so execution time is stable and repeatable on every cycle.

If a loop overruns its time budget even once, the system may behave unpredictably.

Example: Allocation Jitter in a 1 kHz Loop

// Unoptimized: unpredictable timing due to heap allocation

void controlLoop() {

vector<float> samples; // allocates every iteration

samples.push_back(readIMU());

samples.push_back(readGyro());

updateMotors(samples);

}

// Optimized: fixed-size static buffer (predictable timing)

static float samples[2];

void controlLoop() {

samples[0] = readIMU();

samples[1] = readGyro();

updateMotorsFixed(samples);

}In the unoptimized version, the control loop allocates a new vector<float> on every iteration. Even though the vector is small, each creation triggers a heap allocation, and the time required for this allocation is never constant—it depends on factors such as fragmentation, the allocator's state, and the previous allocation history. This variability introduces timing jitter, meaning the loop sometimes completes in 80 µs, sometimes in 120 µs, and sometimes in 200 µs. For a 1 kHz control loop, that unpredictability is dangerous. This results in inconsistent actuator updates, drift in stabilization logic, and unstable behaviour in systems such as drones, robots, and ECUs.

The optimized version replaces the dynamic vector with a fixed-size static buffer. There are zero heap allocations and no calls to push_back(), so the timing per iteration becomes perfectly consistent. Every loop executes the exact same number of instructions with the exact same memory accesses. This eliminates allocator jitter, guarantees deterministic execution, and ensures that the control loop always meets its timing budget.

Energy Efficiency and Sustainability

Embedded devices often operate on extremely constrained power budgets. IoT sensors must run for months on coin-cell batteries, electric vehicles contain dozens of ECUs competing for limited power, drones need every extra minute of flight time, and wearables must operate on micro-batteries. In these environments, every unnecessary instruction, memory access, cache miss, and stall translates directly into wasted energy.

Optimized code reduces power consumption for a simple reason: hardware draws power in proportion to the number of cycles, the number of memory operations, and the duration of the microcontroller's active state. When the CPU executes fewer instructions, performs fewer DRAM accesses, and maintains good data locality, it completes work faster and spends more time in low-power sleep states—this reduction in active time results in significant energy savings. For battery-powered meters, EV battery-management systems, onboard drone computation, and solar-powered IoT devices, even a slight reduction in per-cycle execution time can extend operational life by hours or days.

// floating point math is expensive on MCUs without FPU

float speed = (pulseCount * 0.032f) / 3.6f;

// Fixed point math (much cheaper on embedded CPUs)

int speed = (pulseCount * 32) / 3600;On microcontrollers without an FPU (Floating Point Unit), every floating-point operation is executed through slow software emulation. A single float multiply or divide can take dozens to hundreds of cycles, compared to a few cycles for integer math. In tight control loops—like motor control, encoder processing, PWM updates, or sensor fusion—these extra cycles accumulate and can cause missed deadlines.

By converting the floating-point computation:

float speed = (pulseCount * 0.032f) / 3.6f;Into its fixed-point equivalent:

int speed = (pulseCount * 32) / 3600;The loop avoids all floating-point instructions. The MCU performs only integer multiplies and divides, which are significantly faster and fully deterministic on low-end embedded CPUs.

WedoLow Deep-Tech Approach to Embedded Code Optimization

Embedded systems are scaling in complexity far faster than microcontroller performance is improving. Whether it’s an automotive ECU, a flight-critical avionics module, or a medical-grade controller, modern embedded software demands deterministic execution on hardware that is increasingly constrained.

Instead of relying on manual, inconsistent tuning, WedoLow applies a hardware-aware, semantics-preserving, verification-driven optimization pipeline that transforms C/C++ into deterministic, real-time-compliant code — without altering functional behaviour.

This is not just faster execution.

This is predictable, cycle-bounded, certifiable embedded performance.

Beyond Manual Tuning

Traditional optimization methods assume that engineers can manually inspect loops, reason about bottlenecks, minimize memory churn, and stabilize execution timing through code-level intuition. This assumption collapses under modern embedded workloads. Contemporary firmware is too large, too timing-sensitive, and too tightly coupled to microarchitectural behaviour for manual tuning to remain reliable. Real-time performance is governed by interactions between caches, pipelines, memory buses, interrupt latency, and scheduling windows that are not visible from source code alone. As a result, manual tuning cannot guarantee deterministic behaviour, worst-case execution bounds, or system-level timing stability in today’s embedded environments.

Why Manual Tuning Fails

In C and C++, real-time failures almost never originate from the high-level logic visible in the source code. They arise from microarchitectural interactions that the language does not expose: cache evictions caused by non-contiguous data layouts, pipeline stalls due to dependency chains, misaligned or uncoalesced memory accesses, allocator churn from hidden dynamic allocations, branch mispredictions triggered by irregular control flow, and ISR interference that perturbs execution windows. These behaviours depend on how the compiled machine code interacts with the CPU’s caches, pipelines, and memory subsystem — not on how the source code “looks.” Because standard C/C++ code inspection provides no visibility into these hardware-level effects, manual tuning cannot reliably detect or predict them. WedoLow models these interactions directly, enabling the precise detection of timing hazards that humans and static code reviews cannot see.

Why WedoLow Is Different

WedoLow is not a linter, a compiler switch, or a static analysis add-on. It is a deep-tech, semantics-aware transformation system that operates at a level far beyond conventional tooling. Instead of scanning for surface-level patterns, WedoLow constructs a hardware-accurate model of the program—understanding its semantic intent, data-flow relationships, memory layout, real-time timing constraints, microcontroller architecture, and behaviour-equivalence boundaries. This enables it to transform C/C++ code in a manner that remains mathematically correct while aligning execution with the underlying hardware. The result is deterministic, cycle-stable, architecture-optimized embedded code that traditional compilers and human-driven tuning simply cannot produce.

The WedoLow Pipeline

WedoLow’s optimization pipeline is designed to reflect how embedded performance must be engineered: mathematically, predictably, and with full hardware awareness. The system follows a four-stage process—Analyze, Optimize, Generate, and Verify—producing deterministic, architecture-aligned, and behaviour-preserving C/C++ code.

In the Analyze stage, WedoLow builds a deep, hardware-accurate model of the program using a combination of static and dynamic analysis. It understands control flow, data flow, loop structures, instruction costs, memory access patterns, jitter sources, and worst-case execution times. This allows it to detect issues that are invisible in source code, such as expensive floating-point operations on MCUs without FPU support, redundant instructions within hot loops, unpredictable branching, scattered or non-linear memory access, DRAM-intensive operations that drive power consumption, heap fragmentation paths, and timing drift within real-time sensor and actuator loops. Work that would typically require a hardware engineer, a compiler engineer, and a senior embedded architect is fully automated.

The Optimize stage applies transformations guided by real hardware constraints. WedoLow reasons about MCU architecture, cache behaviour, bus bandwidth, FPU availability, memory pressure, and real-time deadlines to restructure code into a form that executes efficiently while preserving semantic behaviour. Every transformation is constraint-aware and aligns the generated machine behaviour with the capabilities and timing windows of the underlying microcontroller.

The Optimize stage applies C/C++ transformations that are guided directly by real hardware constraints. WedoLow examines how the original code uses the MCU’s architecture—its cache hierarchy, memory layout, bus bandwidth, FPU capabilities, and real-time deadlines—and restructures the C/C++ implementation so it executes more efficiently while preserving its semantic behaviour, meaning it produces the same logical results even after optimization. All transformations are constraint-aware and ensure that the generated machine code aligns with the microcontroller’s timing windows, memory pressure, and instruction-level capabilities. Some optimizations use controlled approximations that can slightly reduce numerical precision; for these cases, we report the Signal-to-Noise Ratio (SNR) so engineers can judge whether the accuracy trade-off is acceptable. We do not guarantee compliance with standards such as MISRA, so the optimized C/C++ code must still be reviewed by an engineer and verified using static-analysis or compliance tools before deployment.

In Generate, WedoLow emits clean, deterministic, human-readable C/C++ that maintains strict behavioural equivalence with the original implementation. The output exhibits predictable, cycle-bound execution, stable control flow suited for real-time analysis, and consistent data-flow semantics. The generated code avoids macro-heavy expansions that hinder compiler reasoning, IR-style constructs unsuitable for verification, and opaque compiler-generated patterns that complicate timing validation and safety certification.

Finally, the Verify stage checks that the optimized code remains mathematically consistent with the original implementation and behaves correctly under the project’s real-time constraints. WedoLow compares inputs and outputs to validate functional consistency, and analyzes execution behaviour within the expected timing window. Loop frequencies (1 kHz, 10 kHz, or custom rates) are evaluated, stack usage is measured, unexpected heap allocations are flagged, pointer aliasing is inspected, and potential data-race issues are highlighted. Verification is performed using the compiler and build commands provided by the developer, ensuring the analysis reflects the real build environment.

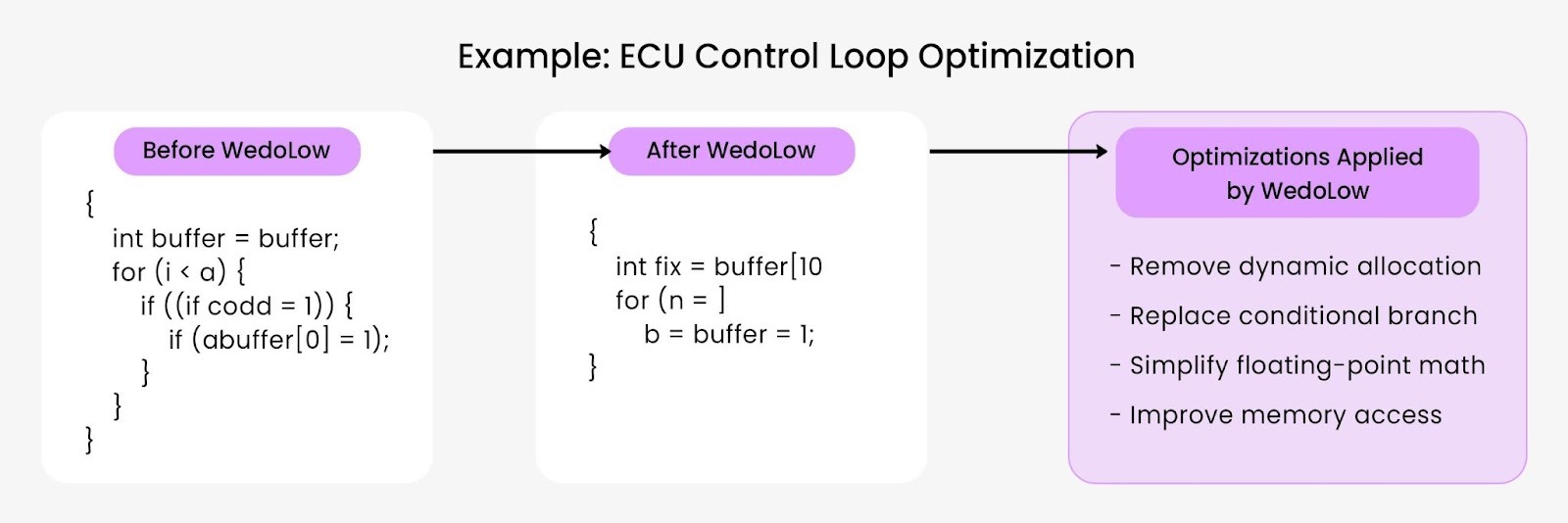

Example: ECU Control Loop Optimization (Using WedoLow)

The following is a realistic example of an ECU loop from an automotive system.

Before WedoLow:

void updateECU(const vector<float>& sensors) {

vector<float> filtered; // unpredictable heap allocation

for (float s : sensors) {

if (s > 0.01f) { // unpredictable branch

float v = applyLowPass(s);

filtered.push_back(v);

}

}

float torque = computeTorque(filtered);

sendToActuator(torque);

}This ECU loop is susceptible to several real-time performance hazards. The creation of a vector<float> inside the function triggers heap allocations, which introduce jitter because allocation time is not deterministic. The if condition inside the loop creates an unpredictable branch, increasing the chance of branch misprediction in a hot path. Each iteration performs floating-point operations, which are costly on MCUs that lack an FPU. The use of push_back() leads to fragmented and non-linear memory access, harming cache behaviour. Combined with the conditional control flow, the loop becomes non-deterministic, making it challenging to guarantee execution time at a 1 kHz control-loop frequency. These factors, together, create a realistic risk of missing real-time deadlines.

After WedoLow:

static float buffer[32];

size_t count = 0;

void updateECU(const vector<float>& sensors) {

count = 0;

for (float s : sensors) {

float v = applyLowPassFast(s); // simplified arithmetic path

buffer[count++] = v; // contiguous writes

}

float torque = computeTorqueDeterministic(buffer, count);

sendToActuatorFixed(torque);

}Optimizations Applied by WedoLow

WedoLow eliminates every source of nondeterminism in the loop. All dynamic allocations are removed, replacing the vector with a fixed-size buffer to ensure constant-time memory usage. The conditional branch inside the loop is replaced with straight-line logic, preventing branch misprediction and ensuring entirely predictable execution. The math path is simplified to reduce floating-point costs, especially on MCUs without FPU support. Memory writes are linearised and contiguous, improving cache locality and eliminating fragmentation. With a fixed iteration path and bounded operations, the loop now has a guaranteed worst-case execution time, ensuring that the ECU’s 1 kHz timing window remains stable in every cycle.

Conclusion: The Future of Embedded Code Optimization

Embedded systems are evolving faster than traditional optimization techniques can keep up. Modern ECUs, drones, medical devices, and IoT nodes now execute workloads once reserved for desktop systems — but on processors with tighter memory, power, and timing constraints. This shift makes optimization not a “performance improvement task,” but a core engineering requirement.

The next frontier of embedded performance is deep, intelligent code optimization — optimization that understands hardware behaviour, respects real-time constraints, eliminates unpredictable execution paths, and ensures correctness. Manual tuning cannot achieve this level of precision at scale.

WedoLow represents this new phase. By combining AI analysis, compiler-level intelligence, and hardware-aware transformation, it generates C/C++ code that is faster, more deterministic, and mathematically verified to match the original behaviour. Whether it’s a microcontroller loop running at 1 kHz or avionics control software running under strict timing windows, WedoLow pushes embedded C/C++ to the limits of what the hardware can deliver.

From automotive safety systems to mission-critical aerospace software, deep tech meets deep optimization — enabling predictable performance, lower power usage, and safer real-time execution for the next generation of embedded systems.

FAQs

What makes embedded code optimization different from general optimization?

Embedded code optimization is designed for systems with limited hardware and strict real-time, deterministic requirements. It focuses on meeting tight memory, power, and timing constraints while processing continuously updated sensor data. The system must solve minor optimization problems in real-time to produce accurate decisions.

General-purpose systems have more resources, so optimization mainly improves speed and efficiency—not strict timing guarantees.

How does WedoLow ensure functional equivalence after optimization?

WedoLow preserves the exact behaviour of the original C/C++ code across its entire optimization pipeline. It models control flow, data flow, pointers, and memory layout to maintain full C/C++ semantic correctness. Static analysis prevents logic or ordering changes, while dynamic validation checks real workloads for identical outputs. Deterministic verification ensures ISR timing, deadlines, and WCET boundaries remain intact. This ensures that the optimized code remains mathematically and functionally equivalent.

Can code optimization reduce hardware costs?

Yes. Optimized C/C++ code reduces CPU cycles, minimises memory access, and lowers RAM/Flash usage—enabling manufacturers to select smaller, more cost-effective microcontrollers without compromising performance. Reducing computational load also lowers required clock frequency and power consumption. In high-volume products, such as automotive ECUs or IoT devices, even a slight MCU downgrade can result in significant hardware savings. Deep optimization increases usable hardware headroom without requiring silicon upgrades.

What’s the measurable ROI of deep code optimization?

The ROI appears in both technical and financial gains. Deeply optimized C/C++ code can often reduce CPU load by 20–60%, enabling longer battery life and lower power consumption. Smaller memory footprints allow more cost-efficient hardware. Eliminating jitter improves control stability and reliability. Because WedoLow generates deterministic, cycle-bounded code, teams spend far less time profiling or debugging timing issues. This shortens validation, accelerates certification, and reduces engineering and hardware costs.

.svg)

.svg)