AI-Generated Code Alone Isn’t Enough

AI coding assistants like GitHub Copilot, Claude, and ChatGPT promise speed and productivity. Yet for embedded developers, “almost right” code isn’t enough:

- Real-time deadlines cannot be missed

- Memory and CPU cycles are strictly limited

- Hardware-specific optimizations are essential

- Safety-critical systems cannot tolerate bugs

Even with AI, developers often spend more time fixing code than writing it, especially in embedded projects where constraints are strict.

Why the Embedded Industry Needs a Game-Changer

The embedded software market is growing rapidly, and AI adoption is accelerating:

- GitHub Copilot: 20M+ users, 50,000 enterprise customers

- Claude Code: 115K+ developers generating 195M lines of code weekly

Yet adoption comes with a paradox: AI accelerates coding, but 66% of developers report spending extra time fixing “almost-right” code, and 45% are frustrated by AI solutions that fail under real-world constraints.

The critical question: How can AI-generated code meet embedded-specific performance, safety, and hardware requirements?

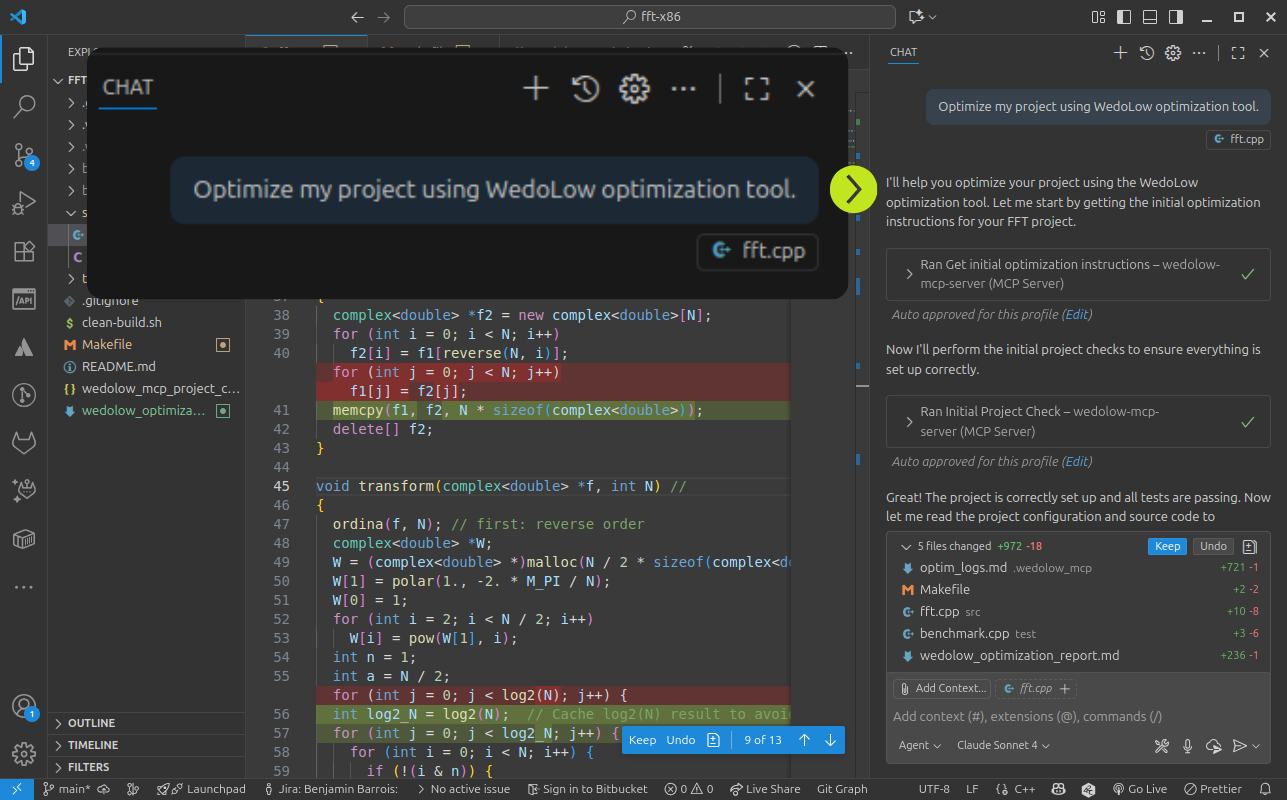

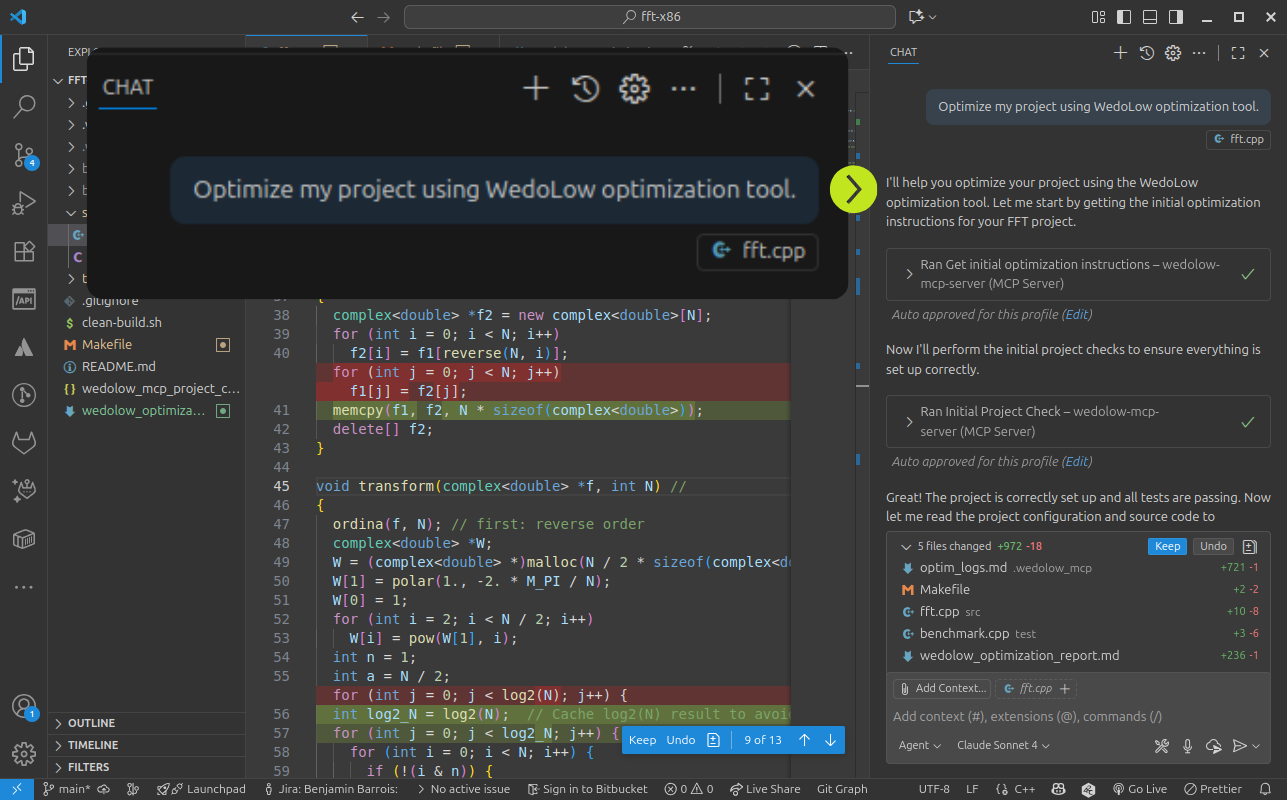

The WedoLow MCP Server: The Missing Link

This is where our Model Context Protocol (MCP) server becomes a game-changer. Unlike AI code generators that work in isolation, the WedoLow MCP Server connects AI directly to your development environment.

With the WedoLow MCP Server, AI can:

- Access your project structure and dependencies

- Run compilation and analysis tools on your actual code

- Gather performance metrics from your target hardware

- Iterate on optimizations using real-world data
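For context, MCP itself is a JSON-RPC 2.0 protocol: an AI client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. The minimal sketch below only builds such a request. The tool name `analyze_performance` and its arguments are hypothetical placeholders, not the WedoLow MCP Server's actual tool catalog.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(
    1,
    "analyze_performance",
    {"source_file": "src/sensor_loop.c", "target": "cortex-m4"},
)
print(request)
```

The server runs the requested tool against the real project and returns its result in the JSON-RPC response, which is what lets the assistant work from measured data rather than guesses.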

The WedoLow MCP Server transforms AI from a code suggestion tool into an intelligent embedded development partner.

Turning AI Code into Reliable Embedded Software

The WedoLow MCP Server bridges the gap between AI code generation and embedded performance demands. It enables developers to:

- Generate and analyze compilation databases

- Examine assembly and performance metrics

- Collect real latency, memory, and CPU data

- Receive hardware-specific optimization suggestions

- Validate improvements through measurable performance metrics
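A compilation database is the `compile_commands.json` file described by Clang's JSON Compilation Database format (CMake writes one when `CMAKE_EXPORT_COMPILE_COMMANDS` is enabled). Each entry records a `directory`, a `file`, and either a `command` string or an `arguments` list. The sketch below is a minimal illustration, not the WedoLow implementation: it extracts per-file optimization and CPU/FPU flags, the kind of context an assistant needs before proposing hardware-specific changes. The sample entry and the chosen flag prefixes are assumptions.

```python
import json
import shlex


def flags_of_interest(entry: dict) -> list[str]:
    """Return optimization (-O*) and CPU/FPU (-mcpu=, -mfpu=, -mfloat-abi=) flags."""
    # An entry carries either a pre-split "arguments" list or a shell "command" string.
    args = entry.get("arguments") or shlex.split(entry["command"])
    prefixes = ("-O", "-mcpu=", "-mfpu=", "-mfloat-abi=")
    return [a for a in args if a.startswith(prefixes)]


def summarize(db_text: str) -> dict[str, list[str]]:
    """Map each source file in a compile_commands.json document to its flags."""
    return {e["file"]: flags_of_interest(e) for e in json.loads(db_text)}


# Hypothetical single-entry database for a Cortex-M4 target.
sample_db = json.dumps([
    {
        "directory": "/work/build",
        "file": "/work/src/fft.c",
        "command": "arm-none-eabi-gcc -mcpu=cortex-m4 -mfpu=fpv4-sp-d16 "
                   "-mfloat-abi=hard -O2 -c /work/src/fft.c -o fft.o",
    }
])
print(summarize(sample_db))
```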

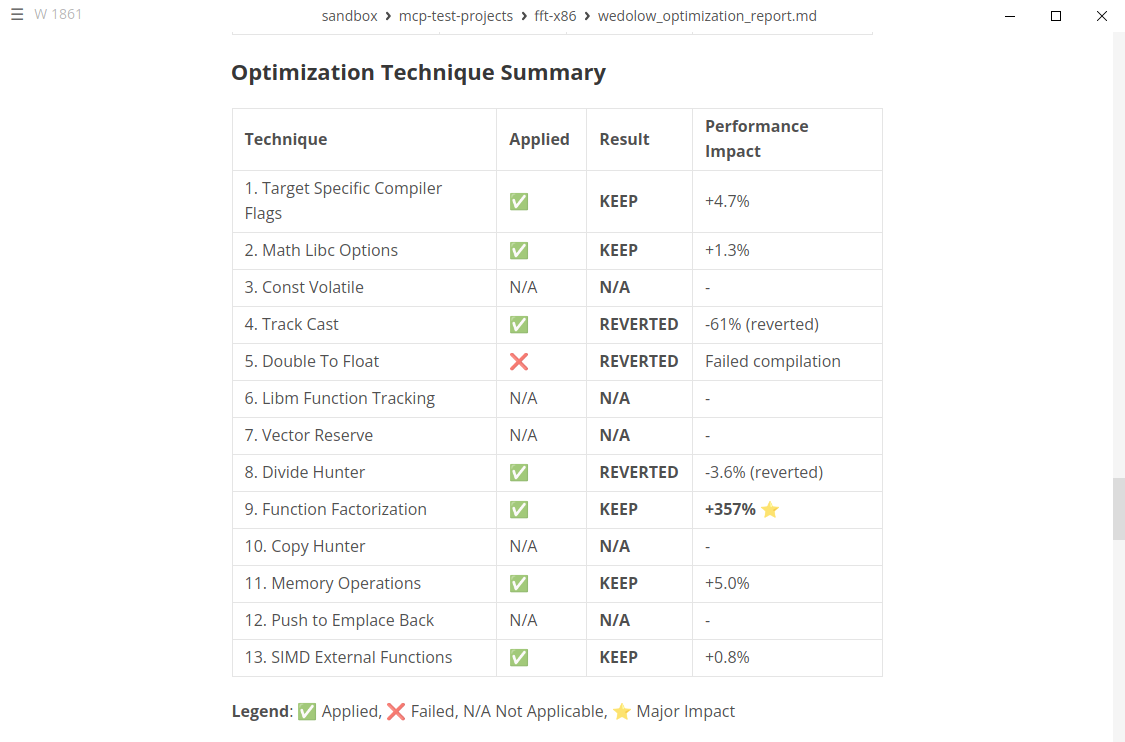

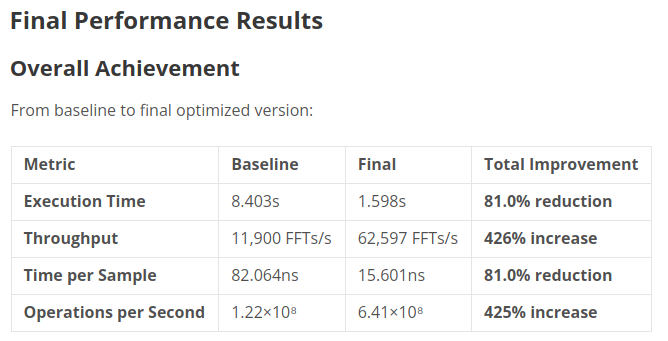

Internal testing has shown:

- An 89% average improvement on an FFT algorithm (across 5 independent runs), compared with a 19% success rate for traditional AI alone

- A roughly 30% performance improvement on an image-processing project, cutting latency from 369 ms to 261 ms
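As a quick check of the image-processing figure, the relative latency reduction is (369 − 261) / 369 ≈ 29.3%, which rounds to the quoted 30%; expressed as a speedup, the same change is 369 / 261 ≈ 1.41×. The helper names below are illustrative only.

```python
def latency_reduction(before_ms: float, after_ms: float) -> float:
    """Fraction by which latency dropped, relative to the baseline."""
    return (before_ms - after_ms) / before_ms


def speedup(before_ms: float, after_ms: float) -> float:
    """How many times faster the optimized version runs."""
    return before_ms / after_ms


before, after = 369.0, 261.0  # image-processing latencies quoted above
print(f"latency reduction: {latency_reduction(before, after):.1%}")  # 29.3%
print(f"speedup: {speedup(before, after):.2f}x")  # 1.41x
```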

How Simple It Is to Get Started

- Install the beta package (provided)

- Configure your embedded project

- Ask your AI assistant: “Optimize this sensor reading loop for low power using beLow.”

- Get optimized code and professional-grade performance reports

Exclusive Beta Program Benefits

- Free 30-day access to the full platform

- Priority support from embedded optimization experts

- Influence on the final product roadmap

- Early adopter pricing at launch

- Private Slack community with embedded developers and optimization experts

- Case study opportunities to showcase your success

Ready to test AI-powered embedded optimization? [Apply for Beta Access Today]

Supported Platforms & Compatibility

- Target processors: ARM Cortex-M/A/R, Infineon TriCore, NXP PowerPC, Intel Skylake

- Compilers: GCC, Clang, IAR, HighTec

- Build systems: CMake, Makefiles, STM32CubeIDE, IAR, Bazel

- Operating systems: Windows 10/11, Ubuntu 20.04–24.04, RHEL 8

How to Join the Beta

Launch Date: October 1, 2025

Duration: 30+ days

Eligibility: Embedded C/C++ developers working on real-time or resource-constrained systems

Requirements:

- Test the WedoLow MCP Server on at least one embedded project

- Provide feedback via Slack

- Maintain confidentiality during beta period

The Future of Embedded AI Is Here

Don’t settle for “almost right” AI-generated code. The WedoLow MCP Server empowers embedded developers to optimize, validate, and accelerate C/C++ projects with AI, turning your AI assistant into a reliable partner for high-performance embedded code.

Questions? Contact us at support@wedolow.com
